Evaluation Metrics

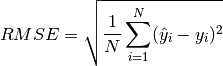

RMSE

Root Mean Square Error (RMSE) is a frequently used measure of the differences between values (sample or population values) predicted by a model or an estimator and the values observed. Its formula is given as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(\hat{y}_n - y_n\right)^2}$$

where $\hat{y}_n$ denotes the value predicted by a model, $y_n$ denotes the observed value, and $N$ is the number of samples.
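As a minimal sketch (pure Python, not the library's own implementation), the RMSE formula translates directly into code. The function name `rmse` and the sample values are illustrative assumptions.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root Mean Square Error between observed and predicted values (hypothetical helper)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    # Average the squared residuals (y_hat_n - y_n)^2 over all N samples ...
    mse = sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    # ... then take the square root to return to the units of y.
    return math.sqrt(mse)


observed = [3.0, 4.0, 5.0, 2.0]
predicted = [3.5, 3.5, 5.0, 3.0]
print(rmse(observed, predicted))  # sqrt(1.5 / 4), about 0.612
```

Because the residuals are squared before averaging, one large error (here the residual of 1.0) dominates the result more than several small ones.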

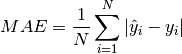

MAE

Mean Absolute Error (MAE) is a measure of errors between paired observations expressing the same phenomenon, here the predicted and the observed values. Its formula is given as follows:

$$\mathrm{MAE} = \frac{1}{N}\sum_{n=1}^{N}\left|\hat{y}_n - y_n\right|$$

where $\hat{y}_n$ denotes the value predicted by a model and $y_n$ denotes the observed value.
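A minimal pure-Python sketch of MAE, assuming plain lists as input; the name `mae` and the sample values are hypothetical and not part of the library's API.

```python
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Absolute Error between observed and predicted values (hypothetical helper)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    # Average the absolute residuals |y_hat_n - y_n| over all N samples.
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


observed = [3.0, 4.0, 5.0, 2.0]
predicted = [3.5, 3.5, 5.0, 3.0]
print(mae(observed, predicted))  # 0.5
```

On the same data RMSE is about 0.612, larger than the MAE of 0.5: RMSE is never smaller than MAE, and the gap grows when errors are uneven in size.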

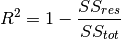

R-squared

R-squared ($R^2$), also known as the coefficient of determination, is usually used in the context of statistical models whose main purpose is either the prediction of future outcomes or the testing of hypotheses on the basis of other related information. $R^2$ is determined by the total sum of squares (denoted as $SS_{tot}$) and the residual sum of squares (denoted as $SS_{res}$), and its formula is as follows:

$$R^2 = 1 - \frac{SS_{res}}{SS_{tot}}$$

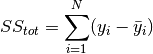

is calculated as follows:

is calculated as follows:

where  denotes the observed value,

denotes the observed value,  denotes the average values of all observed values.

denotes the average values of all observed values.

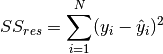

is calculated as follows:

is calculated as follows:

where  denotes the observed value,

denotes the observed value,  denotes the predicted value.

denotes the predicted value.
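Putting $SS_{tot}$, $SS_{res}$ and $R^2$ together, a minimal pure-Python sketch might look like this. The name `r_squared` and the example data are hypothetical; this is an illustration of the formulas, not the library's implementation.

```python
from typing import Sequence


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination R^2 = 1 - SS_res / SS_tot (hypothetical helper)."""
    n = len(y_true)
    if n != len(y_pred) or n == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    y_bar = sum(y_true) / n
    # Total sum of squares: spread of the observed values around their mean.
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)
    # Residual sum of squares: spread of the observed values around the predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all observed values are equal")
    return 1.0 - ss_res / ss_tot


observed = [3.0, 4.0, 5.0, 2.0]
predicted = [3.5, 3.5, 5.0, 3.0]
# SS_tot = 5.0 and SS_res = 1.5, so R^2 = 1 - 1.5 / 5.0, about 0.7
print(r_squared(observed, predicted))
```

$R^2$ reaches 1 for perfect predictions and becomes negative when the model does worse than always predicting $\bar{y}$.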

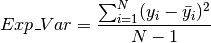

Explained Variance

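Explained Variance measures the discrepancy between a model and the actual data in terms of variance: it is the fraction of the variance of the observed values that the model's predictions account for. Its formula is given as follows:

$$\mathrm{EV} = 1 - \frac{\mathrm{Var}(y - \hat{y})}{\mathrm{Var}(y)}$$

where $y$ denotes the observed values and $\hat{y}$ denotes the predicted values.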
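A minimal pure-Python sketch of explained variance, assuming population variance (`statistics.pvariance`) for both terms; the ratio is the same with sample variance as long as both terms use it. The name `explained_variance` is hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def explained_variance(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """EV = 1 - Var(y - y_hat) / Var(y) (hypothetical helper)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    var_y = pvariance(y_true)
    if var_y == 0:
        raise ValueError("explained variance is undefined when Var(y) is zero")
    # Residuals y - y_hat; only their spread matters, not their mean.
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    return 1.0 - pvariance(residuals) / var_y


observed = [3.0, 4.0, 5.0, 2.0]
predicted = [3.5, 3.5, 5.0, 3.0]
print(explained_variance(observed, predicted))  # 0.75
```

Because the variance subtracts the mean residual, explained variance ignores a constant bias in the predictions: here the residuals average $-0.25$, so EV is 0.75 while $R^2$ on the same data is $1 - 1.5/5.0 = 0.7$. The two metrics coincide only when the residuals have zero mean.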

AUC

Area Under the Curve (AUC), here the area under the ROC curve, is usually used in classification analysis to determine which of the candidate models predicts the classes best. In recommender systems, AUC is usually used as a metric for implicit-feedback recommenders, where the rating is binary and the prediction is a floating-point number ranging from 0 to 1. For more details, you can refer to Wikipedia.
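ROC AUC equals the probability that a randomly chosen positive sample is scored higher than a randomly chosen negative one. The sketch below uses that pairwise (Mann-Whitney) formulation rather than integrating a plotted curve; it is an illustration under that assumption, not necessarily how the library computes AUC, and `roc_auc` is a hypothetical name.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Pairwise (Mann-Whitney) estimate of ROC AUC (hypothetical helper)."""
    if len(labels) != len(scores):
        raise ValueError("labels and scores must have equal length")
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    # Count positive/negative pairs ranked correctly; ties count as half a win.
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 0, 1, 0]          # binary implicit feedback
scores = [0.9, 0.3, 0.4, 0.6]  # predicted scores in [0, 1]
print(roc_auc(labels, scores))  # 3 of 4 pairs ranked correctly -> 0.75
```

The double loop costs O(P·N) for P positives and N negatives, which is fine for illustration; large evaluations usually sort the scores once and use ranks instead.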

LogLoss

Log Loss, also known as Cross-Entropy Loss, is given in its discrete form as follows:

$$\mathrm{LogLoss} = -\frac{1}{N}\sum_{n=1}^{N}\left[y_n\log\hat{y}_n + (1 - y_n)\log(1 - \hat{y}_n)\right]$$

where $y_n \in \{0, 1\}$ indicates whether the $n$-th sample is actually positive, and $\hat{y}_n$ is the predicted probability that it is positive.
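A minimal pure-Python sketch of binary log loss. It assumes predictions are clipped to $[\epsilon, 1-\epsilon]$ with a hypothetical default $\epsilon = 10^{-15}$ so that $\log 0$ is never evaluated; the clipping and the name `log_loss` are illustrative assumptions, not taken from the library.

```python
import math
from typing import Sequence


def log_loss(labels: Sequence[int], probs: Sequence[float], eps: float = 1e-15) -> float:
    """Binary cross-entropy averaged over N samples (hypothetical helper)."""
    if len(labels) != len(probs) or len(labels) == 0:
        raise ValueError("labels and probs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(labels, probs):
        # Clip the probability (eps is an assumed value) so log(0) cannot occur.
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)


print(log_loss([1, 0], [0.8, 0.2]))  # -ln(0.8), about 0.223
```

Confident wrong predictions are punished heavily: predicting 0.01 for a true positive contributes $-\ln 0.01 \approx 4.6$ to the sum, compared with about 0.22 for a prediction of 0.8.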